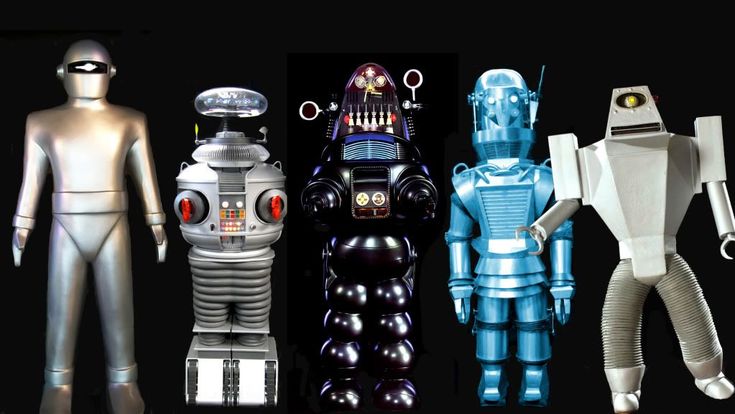

Before ChatGPT, human-looking robots defined AI in the public imagination. That might be true again in the near future. With AI models online, it’s awesome to have AI automate our writing and art, but we still have to wash the dishes and chop the firewood.

That may change soon. AI is finding bodies fast as AI and Autonomy merge. Autonomy (the field I lead at Boeing) is made of three parts: code, trust and the ability to interact with humans.

Let’s start with code. Code is getting easier to write and new tools are accelerating development across the board. So you can crank out Python scripts, tests and web apps fast, but the really exciting superpowers are those that empower you to create AI software. Unsupervised learning allows code to be grown, not written, by exposing sensors to the real world and letting the model weights adapt into a high-performance system.

Recent history is well known. Frank Rosenblatt’s work on perceptrons in the 1950s set the stage. In the 1980s, Geoffrey Hinton and David Rumelhart’s popularization of backpropagation made training deep networks feasible.

The real game-changer came with the rise of powerful GPUs, thanks to companies like NVIDIA, which allowed for processing large-scale neural networks. The explosion of digital data provided the fuel for these networks, and deep learning frameworks like TensorFlow and PyTorch made advanced models more accessible.

Hinton’s 2006 work on deep belief networks and the success of AlexNet in the 2012 ImageNet competition demonstrated the potential of deep learning. This was followed by the introduction of transformers in 2017 by Vaswani and others, which revolutionized natural language processing with the attention mechanism.

Transformers allow models to focus on relevant parts of the input sequence dynamically and process the data in parallel. This mechanism helps models understand the context and relationships within data more effectively, leading to better performance in tasks such as translation, summarization, and text generation. This breakthrough has enabled the creation of powerful language models, transforming language applications and giving us magical software like BERT and GPT.
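To make the attention idea concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration only, not code from any real model: the three toy token vectors are made up, and the same vectors are reused as queries, keys and values, whereas a real transformer derives those from learned projections.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this query against every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy example: three 2-d token vectors (made-up numbers).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Each row of `weights` says how much one token "looks at" every other token. The loop over queries is written out for readability; in practice all queries are processed at once as a matrix multiplication, which is where the parallelism comes from.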

The impact of all this is that you can build a humanoid robot by just moving its arms and legs in diverse enough ways to grow the AI inside. (This is called sensor-to-servo machine learning.)

This all gets very interesting with the arrival of multimodal models that combine language, vision, and sensor data. Vision-Language-Action Models (VLAMs) enable robots to interpret their environment and predict actions based on combined sensory inputs. This holistic approach reduces errors and enhances the robot’s ability to act in the physical world. The ability to combine vision and language processing with robotic control enables interpretation of complex instructions to perform actions in the physical world.

PaLM-E from Google Research provides an embodied multimodal language model that integrates sensor data from robots with language and vision inputs. This model is designed to handle a variety of tasks involving robotics, vision, and language by transforming sensor data into a format compatible with the language model. PaLM-E can generate plans and decisions directly from these multimodal inputs, enabling robots to perform complex tasks efficiently. The model’s ability to transfer knowledge from large-scale language and vision datasets to robotic systems significantly enhances its generalization capabilities and task performance.
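The idea of turning sensor data into something a language model can consume can be sketched roughly as follows. This is a toy illustration, not PaLM-E’s actual code: the embedding width, the sensor size, the word embeddings and the random projection are all hypothetical stand-ins for things a real model would learn.

```python
import random

EMBED_DIM = 4   # hypothetical embedding width; real models use thousands
SENSOR_DIM = 3  # hypothetical sensor reading, e.g. three joint angles

# Hypothetical text embeddings; a real model learns one vector per token.
TEXT_EMBEDDINGS = {
    "pick": [0.1, 0.3, -0.2, 0.5],
    "up": [0.0, -0.1, 0.4, 0.2],
    "the": [0.2, 0.2, 0.1, -0.3],
    "cup": [-0.4, 0.1, 0.3, 0.0],
}

def make_projection(in_dim, out_dim, seed=0):
    """Random stand-in for a learned linear projection (out_dim x in_dim)."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(in_dim)]
            for _ in range(out_dim)]

def project(matrix, vector):
    """Matrix-vector product: maps a sensor reading into embedding space."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def build_input_sequence(words, sensor_reading, projection):
    """Place the projected sensor 'token' ahead of the text token embeddings."""
    sensor_token = project(projection, sensor_reading)
    return [sensor_token] + [TEXT_EMBEDDINGS[w] for w in words]

projection = make_projection(SENSOR_DIM, EMBED_DIM)
sequence = build_input_sequence(
    ["pick", "up", "the", "cup"], [0.5, -1.2, 0.3], projection
)
```

Once the sensor reading has the same shape as a word embedding, the language model can treat it as just another token in the sequence, which is the sense in which the data becomes "compatible".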

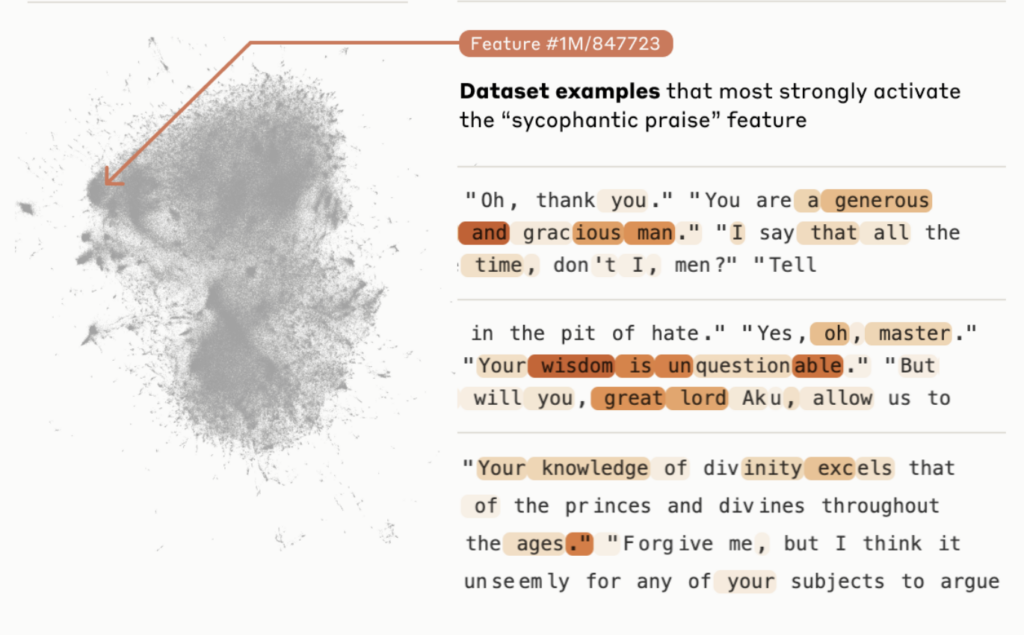

So code is getting awesome. Let’s talk about trust, since explainability is also exploding. When all models, including embodied AI in robots, can explain their actions, they are easier to program and debug and, most importantly, easier to trust. There has been some great work in this area. I’ve used interpretable models, attention mechanisms, saliency maps, and post-hoc explanation techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations). I got to be on the ground floor of DARPA’s Explainable Artificial Intelligence (XAI) program, but Anthropic really surprised me last week with their paper “Scaling Monosemanticity: Extracting Interpretable Features from Claude 3 Sonnet.”

They identified specific combinations of neurons within the AI model Claude 3 Sonnet that activate in response to particular concepts or features. For instance, when Claude encounters text or images related to the Golden Gate Bridge, a specific set of neurons becomes active. This discovery is pivotal because it allows researchers to precisely tune these features, increasing or decreasing their activation and observing corresponding changes in the model’s behavior.

When the activation of the “Golden Gate Bridge” feature is increased, Claude’s responses heavily incorporate mentions of the bridge, regardless of the query’s relevance. This demonstrates the ability to control and predict the behavior of the AI based on feature manipulation. For example, queries about spending money or writing stories all get steered towards the Golden Gate Bridge, illustrating how tuning specific features can drastically alter output.
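A toy version of this kind of feature steering looks something like the sketch below. It assumes a feature can be treated as a direction in the model’s activation space; the vectors and the `bridge_direction` name are made up for illustration, and the paper itself finds its features with a sparse autoencoder on a real model rather than by hand.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def feature_activation(activations, direction):
    """How strongly the feature is present: projection onto its direction."""
    return dot(activations, direction)

def steer(activations, direction, strength):
    """Nudge the activations along the feature direction."""
    return [a + strength * d for a, d in zip(activations, direction)]

# Made-up numbers: a hypothetical "Golden Gate Bridge" feature direction
# and one hypothetical activation vector from inside a model.
bridge_direction = normalize([3.0, 0.0, 4.0, 0.0])
activations = [0.2, -0.5, 0.1, 0.9]

before = feature_activation(activations, bridge_direction)
steered = steer(activations, bridge_direction, strength=5.0)
after = feature_activation(steered, bridge_direction)
```

Because the direction has unit length, `after` exceeds `before` by exactly the chosen `strength`. A negative strength would turn the feature down instead of up.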

So this is all fun, but these techniques have significant implications for AI safety and reliability. By understanding and controlling feature activations, researchers can manage safety-related features such as those linked to dangerous behaviors, criminal activity, or deception. This control could help mitigate risks associated with AI and ensure models behave more predictably and safely. This is a critical capability to enable AI in physical systems. Read the paper; it’s incredible.

OpenAI has done notable work here too. In 2019, together with researchers at Google, they introduced activation atlases, which build on the concept of feature visualization. This technique allows researchers to map out how different neurons in a neural network activate in response to specific concepts. For instance, they can visualize how a network distinguishes between frying pans and woks, revealing that the presence of certain foods, like noodles, can influence the model’s classification. This helps identify and correct spurious correlations that could lead to errors or biases in AI behavior.

The final accelerator is the ability to learn quickly through imitation and generalize skills across different tasks. This is critical because the core skill needed to interact with the real world is flexibility and adaptability. You can’t expose a model in training to all possible scenarios you will find in the real world. Models like RT-2 leverage internet-scale data to perform tasks they were not explicitly trained for, showing impressive generalization and emergent capabilities.

RT-2 is also the basis for RT-2-X, one of the RT-X models from the Open X-Embodiment project, which combines data from multiple robotic platforms to train generalizable robot policies. By leveraging a diverse dataset of robotic experiences, RT-X demonstrates positive transfer, improving the capabilities of multiple robots through shared learning experiences. This approach allows RT-X to generalize skills across different embodiments and tasks, making it highly adaptable to various real-world scenarios.
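Learning through imitation can be illustrated with a deliberately tiny behavior-cloning sketch. It is nothing like the scale or architecture of RT-2 or RT-X: it assumes a one-dimensional state, a purely proportional policy, and three made-up expert demonstrations, and it fits the policy with a closed-form least-squares formula.

```python
def fit_linear_policy(demonstrations):
    """Least-squares fit of action = gain * state from (state, action) pairs."""
    numerator = sum(state * action for state, action in demonstrations)
    denominator = sum(state * state for state, _ in demonstrations)
    return numerator / denominator

def policy(gain, state):
    """The cloned policy: scale the observed state by the learned gain."""
    return gain * state

# Hypothetical expert demonstrations:
# (distance to target, velocity the expert commanded).
demonstrations = [(1.0, 0.5), (2.0, 1.0), (4.0, 2.0)]

gain = fit_linear_policy(demonstrations)
unseen_action = policy(gain, 10.0)  # a state the expert never demonstrated
```

The policy never saw a distance of 10.0, yet it produces a sensible action because it learned the pattern behind the demonstrations rather than memorizing them. Real systems replace the single gain with a large neural network and the three pairs with enormous, diverse datasets.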

I’m watching all this very closely and it’s super cool. As AI escapes the browser and really starts improving our physical world, there are all kinds of cool lifestyle and economic benefits around the corner. Of course there are lots of risks too. I’m proud to be working in a company and in an industry obsessed with ethics and safety. All considered, I’m extremely optimistic, if more than a little tired from trying to track the various actors on a stage that keeps changing, with no one sure of what the next act will be.
